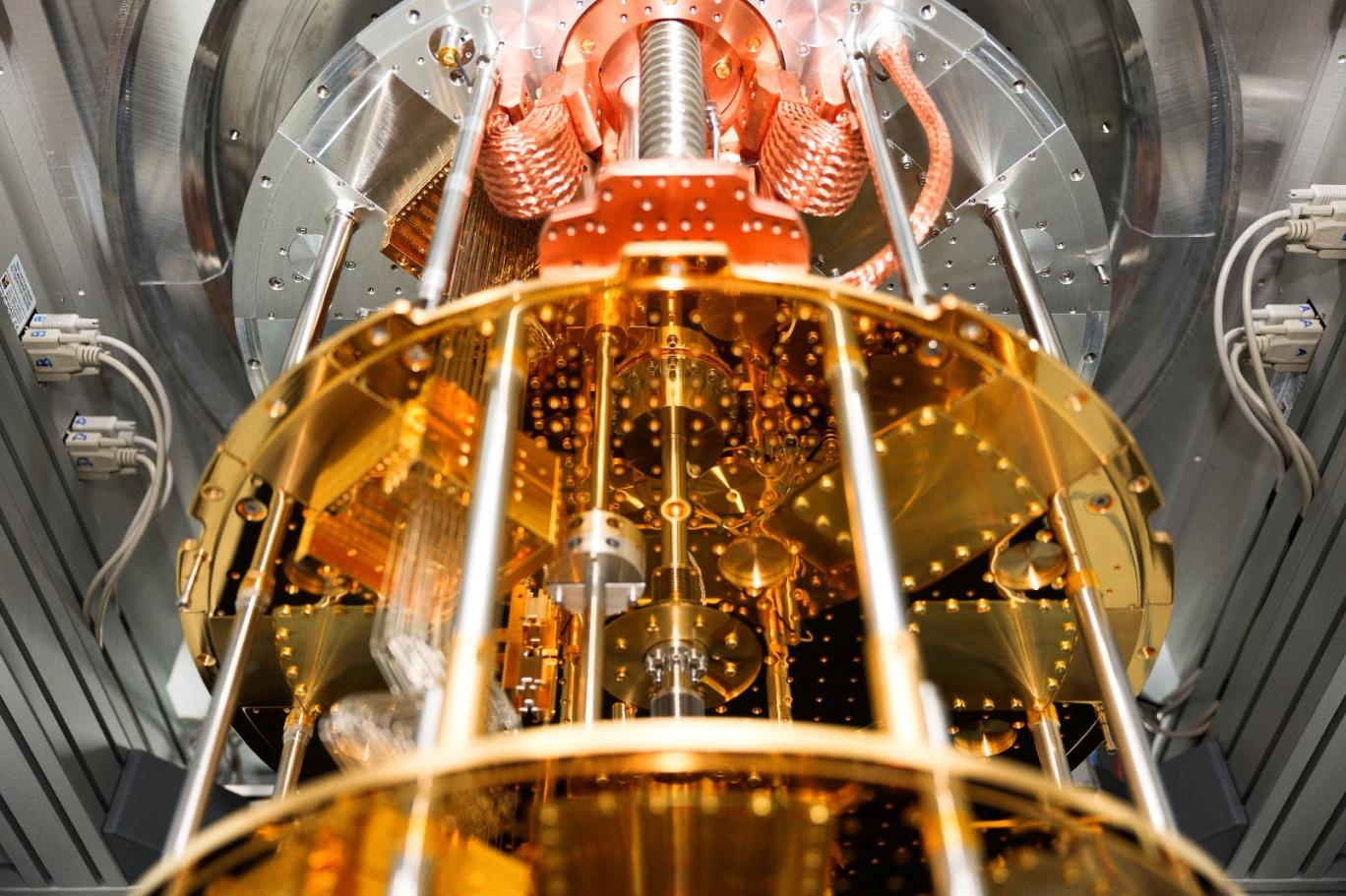

Microsoft and Quantinuum announced that they have sharply reduced the errors of quantum computers using a new technique.

Quantum computers hold great promise for both performance and speed over traditional computers. But their high error rates are one of the biggest obstacles to the new technology.

Researchers often try to overcome this problem by adding large numbers of qubits and using error correction techniques. Qubits, short for quantum bits, are the basic unit of information in quantum computers. Unlike bits in classical computers, which can exist in only one of two states, 0 or 1, qubits can be in a combination of both states at the same time. This phenomenon, called superposition, forms the basis of quantum computing.
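As a rough illustration of superposition, the sketch below represents a single qubit as a pair of amplitudes and simulates measuring it. It is a toy model on a classical computer, not how quantum hardware is programmed, and the function names (`equal_superposition`, `probabilities`, `measure`) are made up for this example.

```python
import math
import random

def equal_superposition():
    """Amplitudes (a0, a1) of a qubit that is equally 0 and 1."""
    amp = 1 / math.sqrt(2)
    return (amp, amp)

def probabilities(state):
    """Chance of reading 0 or 1: the squared magnitude of each amplitude."""
    a0, a1 = state
    return (abs(a0) ** 2, abs(a1) ** 2)

def measure(state, rng):
    """Simulate one measurement, which always yields a plain 0 or 1."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1

state = equal_superposition()
p0, p1 = probabilities(state)

# Measuring many identically prepared qubits gives roughly half 0s and half 1s.
rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [measure(state, rng) for _ in range(1000)]
print(f"P(0)={p0:.2f}, P(1)={p1:.2f}, ones measured: {sum(samples)} of 1000")
```

The qubit holds both possibilities until it is measured. Each measurement then returns an ordinary 0 or 1, with the odds set by the amplitudes.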

To address the tendency of qubits to fail at the slightest change in their environment, Microsoft applied its own error correction algorithm to Quantinuum’s physical qubits, producing 4 logical qubits from 30 physical qubits. Using this method, called qubit virtualization, the researchers said they ran 14,000 experiments on the quantum computer without a single error.
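The general idea of trading several unreliable physical units for one more reliable logical unit can be sketched with a classical repetition code. This is only an analogy and not the algorithm Microsoft used: real quantum error correction cannot simply copy qubits and relies on different machinery. The error probability, the number of copies, and all function names below are assumptions chosen for illustration.

```python
import random

def encode(bit, n):
    """Store one logical bit as n identical physical copies."""
    return [bit] * n

def noisy(bits, p, rng):
    """Flip each physical bit independently with probability p."""
    return [b ^ 1 if rng.random() < p else b for b in bits]

def decode(bits):
    """Recover the logical bit by majority vote."""
    return 1 if sum(bits) > len(bits) / 2 else 0

def logical_error_rate(p, n, trials, rng):
    """Fraction of trials in which the decoded bit differs from the stored 0."""
    errors = 0
    for _ in range(trials):
        if decode(noisy(encode(0, n), p, rng)) != 0:
            errors += 1
    return errors / trials

rng = random.Random(1)   # fixed seed so the run is repeatable
physical = 0.05          # assumed per-bit error probability
logical = logical_error_rate(physical, n=7, trials=20000, rng=rng)
print(f"physical error rate: {physical}, logical error rate: {logical}")
```

With seven copies, a wrong answer requires at least four simultaneous flips, so the logical error rate comes out far below the physical one. The cost is using many physical bits for each logical bit.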

Further tests showed that the error rate was one in 100,000.

Jason Zander, Microsoft’s Vice President of Strategic Missions and Technologies, told Reuters that this is the best rate they have ever achieved:

"We ran more than 14,000 separate experiments without a single error – a rate 800 times better than anything on record."

Microsoft has announced plans to roll out the technology to its cloud computing customers in the coming months.

The team of computer engineers at Quantinuum and computer scientists at Microsoft published a paper detailing their work on the preprint server arXiv.

Quantum computers have the potential to pave the way for major breakthroughs in fields as diverse as health, the environment and artificial intelligence. This technology, which can go far beyond classical computers in terms of both performance and speed, could enable scientific calculations that would take millions of years with existing computers.

For example, because quantum computers have the potential to model how chemical reactions take place, they could eliminate the need to perform those reactions in the lab when developing drugs.